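There are currently two popular approaches to sentiment analysis: the lexicon-based method and the machine-learning method. The machine-learning method starts from a corpus whose sentiment labels are already known and builds a document-term matrix. It then applies a classification algorithm (kNN, Naive Bayes (NB), random forest (RF), SVM, deep learning (DL), etc.) to predict the sentiment of each sentence.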
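A minimal sketch of the machine-learning route follows. It assumes the reviews are already tokenized; Chinese text would first need a word segmenter such as jieba. The code builds a document-term matrix of raw counts and fits a multinomial Naive Bayes classifier with Laplace smoothing, which is only one of the algorithms listed above. The function names and the toy corpus are hypothetical and exist only for illustration.

```python
import math


def build_dtm(docs):
    """Build a sorted vocabulary and a document-term matrix of raw counts."""
    vocab = sorted({tok for doc in docs for tok in doc})
    index = {tok: i for i, tok in enumerate(vocab)}
    matrix = []
    for doc in docs:
        row = [0] * len(vocab)
        for tok in doc:
            row[index[tok]] += 1
        matrix.append(row)
    return vocab, matrix


def train_nb(matrix, labels, alpha=1.0):
    """Fit multinomial Naive Bayes on a count matrix (alpha = Laplace smoothing)."""
    classes = sorted(set(labels))
    n_terms = len(matrix[0])
    log_prior, log_lik = {}, {}
    for c in classes:
        rows = [row for row, y in zip(matrix, labels) if y == c]
        log_prior[c] = math.log(len(rows) / len(matrix))
        totals = [sum(col) for col in zip(*rows)]  # per-term counts in class c
        denom = sum(totals) + alpha * n_terms
        log_lik[c] = [math.log((t + alpha) / denom) for t in totals]
    return classes, log_prior, log_lik


def predict_nb(model, vocab, doc):
    """Return the class with the highest log-posterior for one tokenized doc."""
    classes, log_prior, log_lik = model
    index = {tok: i for i, tok in enumerate(vocab)}
    scores = {}
    for c in classes:
        s = log_prior[c]
        for tok in doc:
            if tok in index:  # words unseen in training are ignored
                s += log_lik[c][index[tok]]
        scores[c] = s
    return max(scores, key=scores.get)


# Hypothetical labeled training reviews (already tokenized).
train_docs = [
    ["space", "large", "comfortable"],
    ["rear", "seat", "roomy", "comfortable"],
    ["trunk", "small", "cramped"],
    ["rear", "seat", "cramped", "small"],
]
train_labels = ["pos", "pos", "neg", "neg"]

vocab, dtm = build_dtm(train_docs)
model = train_nb(dtm, train_labels)
print(predict_nb(model, vocab, ["roomy", "comfortable", "trunk"]))  # pos
```

In practice a library implementation (for example scikit-learn's `CountVectorizer` plus `MultinomialNB`) would replace these hand-written helpers; they are spelled out here only to make the matrix-then-classifier pipeline visible.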

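Labeling the sentiment of each sentence with a lexicon requires no deep theory. The idea is to segment each sentence into words and compare them against a positive lexicon and a negative lexicon. This yields a positive score (how many of the words are positive), a negative score (how many are negative), and an overall score (positive score minus negative score). Although the method is easy to understand, it is labor-intensive: someone has to build the positive and negative lexicons, import custom dictionaries, and so on.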
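The scoring rule just described can be sketched in a few lines of Python. This assumes the sentence has already been segmented into words. The function name `lexicon_scores` and the tiny lexicons are hypothetical; real lexicons would be far larger and built by hand, as noted above.

```python
def lexicon_scores(tokens, positive, negative):
    """Return (positive score, negative score, overall score) for one tokenized sentence."""
    pos = sum(1 for tok in tokens if tok in positive)  # words found in the positive lexicon
    neg = sum(1 for tok in tokens if tok in negative)  # words found in the negative lexicon
    return pos, neg, pos - neg                         # overall = positive - negative


# Hypothetical lexicons and an already-segmented sentence.
positive_words = {"spacious", "roomy", "comfortable", "satisfied"}
negative_words = {"cramped", "small", "disappointed"}

sentence = ["rear", "seat", "roomy", "but", "trunk", "small"]
print(lexicon_scores(sentence, positive_words, negative_words))  # (1, 1, 0)
```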

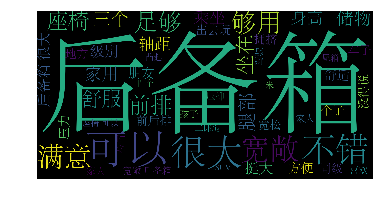

As an example, we take reviews of a car's interior space as the data set and label each review as positive or negative.
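One simple way to turn the overall score into a label is by its sign. This threshold is an assumption, since the original rule is not shown: a score above zero means positive, below zero means negative, and exactly zero is treated here as neutral. The helper `label_review`, the lexicons, and the reviews below are all hypothetical.

```python
def label_review(tokens, positive, negative):
    """Label a tokenized review by the sign of (positive hits - negative hits)."""
    score = sum(tok in positive for tok in tokens) - sum(tok in negative for tok in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"  # assumption: ties are not forced into either class


# Hypothetical lexicons and segmented reviews about interior space.
positive_words = {"large", "roomy", "comfortable", "satisfied"}
negative_words = {"small", "cramped", "disappointed"}
reviews = [
    ["space", "is", "large", "and", "comfortable"],
    ["trunk", "too", "small"],
    ["average", "space"],
]

labels = [label_review(r, positive_words, negative_words) for r in reviews]
print(labels)  # ['positive', 'negative', 'neutral']
```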

Judging from the word cloud of the results, consumers clearly approve of the car's interior space and are generally satisfied with it.
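A word cloud sizes each word by how often it occurs, so the input it needs is a word-frequency table. The sketch below computes one with `collections.Counter`, assuming tokenized reviews and a hypothetical stop-word list. The plotting tool used for the original figure is not shown. As one example, the Python `wordcloud` package can render such a table via `WordCloud().generate_from_frequencies(...)`.

```python
from collections import Counter


def word_frequencies(docs, stopwords=frozenset()):
    """Count token frequencies across tokenized docs, skipping stop words."""
    counts = Counter()
    for doc in docs:
        counts.update(tok for tok in doc if tok not in stopwords)
    return counts


# Hypothetical segmented reviews and stop words.
docs = [["space", "is", "large"], ["rear", "space", "very", "large"], ["space", "comfortable"]]
freqs = word_frequencies(docs, stopwords={"is", "very"})
print(freqs.most_common(2))  # [('space', 3), ('large', 2)]
```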

Data and source code: https://pan.baidu.com/s/1o8QBDOI (password: 2xw8)